An Overview of Floating Point Equality

For those of us who write software, particularly in the test and measurement industry, the singular purpose of many of our programs is to determine whether a given device under test (DUT) has passed or failed. How do we do this? Well, we measure things: voltages, currents, resistances, temperatures… the list goes on and on. But the measurements themselves aren’t sufficient. We need some form of limits to compare them to. Is Vout >= 3.0 V and <= 5.0 V?

We do that all day… it’s trivial, right?

Well, no, not really. See those equal signs and that decimal point? They should raise red flags and set off alarm bells in your head. A while back, I literally had to prove that 0.005 GHz was equivalent to 5 MHz. What should have been a split-second calculation took quite a bit longer to work out.

To understand why, let’s first look at the way floating point numbers are implemented in software, as specified by the IEEE 754 standard. We’ll focus on 32-bit singles. 64-bit doubles work the same way but use more bits (11 for the exponent and 52 for the mantissa), so they can represent numbers more precisely.

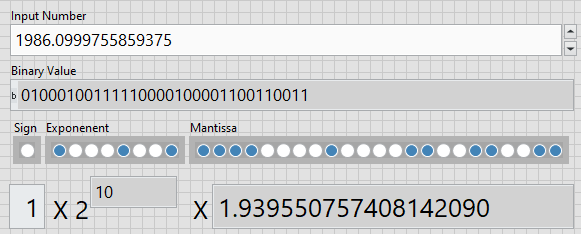

Every number is broken into three fields:

- The sign, as the name implies, simply indicates whether the number is negative or positive. This uses 1 bit.

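- The mantissa (also called the significand) holds the significant digits of the number. This uses 23 bits; for normal numbers, a leading 1 is implied rather than stored, which gives 24 bits of precision and a mantissa that is always at least 1.0 and less than 2.0.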

- The exponent is the power of 2 by which the mantissa is multiplied to get the true value. This uses 8 bits, stored with a bias of 127 (a stored value of 130 means 2^3).
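To make the layout concrete, here is a minimal C sketch that pulls the three fields out of a single and rebuilds its value. It assumes `float` is a 32-bit IEEE 754 single (true on essentially every current platform), and `float_fields`, `split_float`, and `join_float` are hypothetical helpers for illustration only. It handles normal numbers only; zeros, subnormals, infinities, and NaNs are encoded differently.

```c
#include <math.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper type: the three fields of an IEEE 754 single. */
struct float_fields {
    uint32_t sign;     /* 1 bit */
    uint32_t exponent; /* 8 bits, stored with a bias of 127 */
    uint32_t fraction; /* 23 bits; the leading 1 of a normal number is implicit */
};

/* Copy the 32 bits of x into an integer (memcpy avoids aliasing problems)
   and mask out each field. Assumes float is a 32-bit IEEE 754 single. */
struct float_fields split_float(float x)
{
    uint32_t bits;
    memcpy(&bits, &x, sizeof bits);

    struct float_fields f;
    f.sign = bits >> 31;
    f.exponent = (bits >> 23) & 0xFFu;
    f.fraction = bits & 0x7FFFFFu;
    return f;
}

/* Rebuild a normal number: (-1)^sign * (1 + fraction / 2^23) * 2^(exponent - 127). */
double join_float(struct float_fields f)
{
    double mantissa = 1.0 + (double)f.fraction / 8388608.0; /* 8388608 = 2^23 */
    double magnitude = ldexp(mantissa, (int)f.exponent - 127);
    return f.sign ? -magnitude : magnitude;
}
```

For 256.0f, `split_float` should report a sign of 0, a stored exponent of 135 (8 + 127), and a fraction of 0, i.e. a mantissa of exactly 1.0. On most Unix-like toolchains, link with `-lm` for `ldexp`.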

First, let’s look at a simple case. Let’s say we have 256.0 for our value. Broken out into its parts, we have a mantissa of 1.0 and an exponent of 8. With a little math, 1.0 * 2^8 is 256, exactly. All is well.

Now let’s look at something a little more complex: the number 14.141. Stored as a single, it has a mantissa of 1.767624974250793460 and an exponent of 3. Doing the math, 1.767… * 2^3 turns out to be 14.1409997940063476, which for most practical purposes is 14.141 and is, in fact, the closest we can ever come to representing 14.141 using the single datatype.

Getting back to my real-world example, let’s take 0.005 GHz and convert it to Hz:

0.005 * 1e9 = 5000000, right? Not exactly. First, let’s look at datatypes. In my case, 0.005 was a single, but for the sake of accuracy (more bits are always better, right?) I made my 1e9 constant a double. 0.005 as a single is actually 1.27999997138977051 * 2^-8. Multiplied by 1.86264514923095703 * 2^29 (exactly 1e9), you get approximately 1.1920928689 * 2^22. That, my friends, is 4999999.88824129105 Hz, and not 5 MHz.
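The mixed-precision conversion is easy to reproduce. In this minimal C sketch, `ghz_to_hz_mixed` is a hypothetical stand-in for the original conversion code: the value arrives as a `float` and the multiplier is a `double`, so C widens the `float` and performs the multiplication in double precision.

```c
#include <stdio.h>

/* Convert GHz to Hz with a double-precision multiplier.
   The float argument is widened to double before the multiplication,
   so the result carries the single-precision error already in 0.005f. */
double ghz_to_hz_mixed(float ghz)
{
    const double hz_per_ghz = 1e9;
    return ghz * hz_per_ghz;
}

void print_mixed_example(void)
{
    double hz = ghz_to_hz_mixed(0.005f);
    printf("%.17g Hz, equal to 5 MHz? %s\n", hz, hz == 5e6 ? "yes" : "no");
}
```

On an IEEE 754 platform, `print_mixed_example()` prints `4999999.888241291 Hz, equal to 5 MHz? no`.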

Now, let’s evaluate the assumption that “more bits are always better.” Because I multiplied a single by a double, the single was widened to a double and the result was a double. And while 1.27999997138977051 * 2^-8 is the closest approximation to 0.005 that a single can hold, a double can get much closer. Widening doesn’t recover that lost precision, though: 1.27999997138977051 * 2^-8 is still 0.00499999988824129 as a double, and the double’s extra bits faithfully carry that error through the multiplication. Our additional precision has just led us off the deep end. Had we used a single for our 1e9 multiplier, the product would have been rounded to the nearest single, which is exactly 1.1920928955078125 * 2^22 = 5,000,000. To be clear, that’s a less precise answer that just happens to round to the desired number.
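Here is the 0.005 GHz conversion with a single-precision multiplier, again as a hypothetical sketch. It assumes IEEE 754 singles and `FLT_EVAL_METHOD` of 0 (float arithmetic evaluated in float, as on typical x86-64 and ARM builds). Storing the product in a `float` rounds it to the nearest single, which happens to be exactly 5,000,000. The print helper also shows the widening problem directly: converting 0.005f to a double reproduces the single’s stored value rather than 0.005.

```c
#include <stdio.h>

/* Convert GHz to Hz entirely in single precision.
   1e9f is exactly representable as a single, and assigning the product
   to a float rounds it to the nearest single. */
float ghz_to_hz_single(float ghz)
{
    const float hz_per_ghz = 1e9f;
    float hz = ghz * hz_per_ghz;
    return hz;
}

void print_single_example(void)
{
    /* Widening a float to double is exact, so this shows the single's
       stored value, not the double closest to 0.005. */
    printf("0.005f as a double: %.17g\n", (double)0.005f);
    printf("single-precision product: %.9g Hz\n", (double)ghz_to_hz_single(0.005f));
}
```

Under those assumptions, `print_single_example()` prints `0.004999999888241291` for the widened value and `5000000` for the single-precision product.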

Let’s look at one more case: is 1.9 + 0.1 equal to 2.1 - 0.1? We’ll keep our datatypes consistent this time, using singles throughout to illustrate the point more clearly:

1.89999997615814209 * 2^0 + 1.600000023841857910 * 2^-4 = 1.0 * 2^1 = 2.0 (after rounding to the nearest single)

1.049999952316284180 * 2^1 - 1.600000023841857910 * 2^-4 = 1.99999988079071045 (after rounding to the nearest single)
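The comparison can be reproduced with the C sketch below. `compare_sums` and `print_sums` are hypothetical helpers; storing each result in a `float` forces it to be rounded to single precision, which mirrors the working above (assuming IEEE 754 singles and the default round-to-nearest mode).

```c
#include <stdio.h>

/* Evaluate 1.9 + 0.1 and 2.1 - 0.1 in single precision.
   Assigning each result to a float rounds it to the nearest single. */
void compare_sums(float *sum, float *difference)
{
    *sum = 1.9f + 0.1f;        /* rounds up to exactly 2.0f */
    *difference = 2.1f - 0.1f; /* rounds to 1.99999988f, one step below 2.0f */
}

void print_sums(void)
{
    float sum, difference;
    compare_sums(&sum, &difference);
    printf("1.9f + 0.1f = %.9g\n", (double)sum);
    printf("2.1f - 0.1f = %.9g\n", (double)difference);
    printf("equal? %s\n", sum == difference ? "yes" : "no");
}
```

Under those assumptions, `print_sums()` reports `2`, `1.99999988`, and `equal? no`.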

So, 2.1 - 0.1 is not equal to 1.9 + 0.1, even when we stick with a consistent representation. How do we handle that scenario? Now that we understand the underlying problem, I’ll discuss the correct way to do floating point equality comparisons in my next blog post. Stay tuned…

Never miss a post: subscribe to the Hiller blog.